BOOKINGAPI is designed to book hotels in real time in as few as two steps. It covers the complete booking process: generating lists of hotels, confirming bookings, listing bookings, obtaining booking information, making cancellations, and modifying existing bookings.

BOOKINGAPI works in combination with CONTENTAPI, which provides content information about the hotels, such as pictures, descriptions, facilities, and services. Please refer to the ContentAPI documentation and IO/DOCS for related information.

BOOKINGAPI has been designed for a two-step confirmation, but due to the complexity of client and provider systems, a third method has also been designed.

This is the frontend API for users.

BokaBord API for showing restaurant information and handling reservations.

The BlueInk API v2 is the second major release of the BlueInk eSignature API.

This is a generated connector for [Google Blogger API v3.0](https://developers.google.com/blogger/docs/3.0/getting_started) OpenAPI specification. The Blogger API provides access to posts, comments and pages of a Blogger blog.
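The sketch below builds a Blogger API v3 request that lists a blog's posts. It assumes you read public data with an API key. The blog ID and key are placeholders, and the helper name `list_posts_url` is hypothetical.

```python
from urllib.parse import quote, urlencode

# Blogger API v3 base URL.
BLOGGER_BASE = "https://www.googleapis.com/blogger/v3"


def list_posts_url(blog_id: str, api_key: str, max_results: int = 10) -> str:
    """Build the URL for GET /blogs/{blogId}/posts authenticated with an API key.

    An API key is sufficient for public blogs; private data needs an OAuth 2.0 token.
    """
    query = urlencode({"key": api_key, "maxResults": max_results})
    return f"{BLOGGER_BASE}/blogs/{quote(blog_id, safe='')}/posts?{query}"


# Usage (placeholder values):
# print(list_posts_url("1234567890", "YOUR_API_KEY"))
```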

This is the specification of the bLink `account-information-service` module API, as implemented by SIX and used by clients (e.g. third-party providers).

This documentation provides you with Blackboard REST API endpoint requirements, sample JSON, request parameters, and response messages. API documentation: https://developer.blackboard.com/portal/displayApi

This is a generated connector for [Bitbucket API v2.0](https://developer.atlassian.com/bitbucket/api/2/reference/) OpenAPI Specification. Code against the Bitbucket API to automate simple tasks, embed Bitbucket data into your own site, build mobile or desktop apps, or even add custom UI add-ons into Bitbucket itself using the Connect framework.

This is a generated connector for [Bitly API v4.0.0](https://dev.bitly.com/api-reference) OpenAPI specification. Bitly is the most widely trusted link management platform in the world. By using the Bitly API, you will exercise the full power of your links through automated link customization, mobile deep linking, and click analytics.
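The snippet below shows a minimal sketch of shortening a link with Bitly API v4. It prepares the `POST /v4/shorten` request but does not send it, and it assumes a Bearer access token. The helper name is hypothetical.

```python
import json
import urllib.request

BITLY_SHORTEN_URL = "https://api-ssl.bitly.com/v4/shorten"


def build_shorten_request(long_url: str, token: str) -> urllib.request.Request:
    """Prepare (but do not send) a POST /v4/shorten request for one long URL."""
    body = json.dumps({"long_url": long_url}).encode("utf-8")
    return urllib.request.Request(
        BITLY_SHORTEN_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Sending it would look like:
# with urllib.request.urlopen(build_shorten_request(url, token)) as resp:
#     short_link = json.load(resp)["link"]
```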

Code against the Bitbucket API to automate simple tasks, embed Bitbucket data into your own site, build mobile or desktop apps, or even add custom UI add-ons into Bitbucket itself using the Connect framework. Contact support: Bitbucket Support (support@bitbucket.org).

This is a generated connector for [Bing Autosuggest API v1](https://www.microsoft.com/en-us/bing/apis/bing-autosuggest-api) OpenAPI specification. The Autosuggest API lets partners send a partial search query to Bing and get back a list of suggested queries that other users have searched on. In addition to including searches that other users have made, the list may include suggestions based on user intent. For example, if the query string is "weather in Lo", the list will include relevant weather suggestions.

Typically, you use this API to support an auto-suggest search box feature. For example, as the user types a query into the search box, you would call this API to populate a drop-down list of suggested query strings. If the user selects a query from the list, you would either send the user to the Bing search results page for the selected query or call the Bing Search API to get the search results and display the results yourself.
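The drop-down workflow described above is sketched below. This is an assumption-laden illustration. The endpoint is assumed to be the Bing v7 `Suggestions` URL with an `Ocp-Apim-Subscription-Key` header, and the response is assumed to use the `suggestionGroups[].searchSuggestions[].displayText` shape. Check both against this connector's spec. Both helper names are hypothetical.

```python
import urllib.parse
import urllib.request

# Assumed endpoint; confirm against the connector's OpenAPI specification.
AUTOSUGGEST_URL = "https://api.bing.microsoft.com/v7.0/Suggestions"


def build_suggest_request(partial_query: str, subscription_key: str, market: str = "en-US"):
    """Prepare (but do not send) a suggestion request for the text typed so far."""
    params = urllib.parse.urlencode({"q": partial_query, "mkt": market})
    return urllib.request.Request(
        f"{AUTOSUGGEST_URL}?{params}",
        headers={"Ocp-Apim-Subscription-Key": subscription_key},
    )


def dropdown_items(response_json: dict) -> list:
    """Flatten the (assumed) suggestion groups into strings for a drop-down list."""
    items = []
    for group in response_json.get("suggestionGroups", []):
        for suggestion in group.get("searchSuggestions", []):
            items.append(suggestion["displayText"])
    return items
```

In a search box, you would call `build_suggest_request` as the user types and render `dropdown_items(...)` of the parsed JSON response.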

This documentation describes the Binary24 platform REST API, which was developed so that users can connect their applications and automate their strategies. It describes how to connect to our backend system by authenticating with an OAuth2 Bearer token. Contact support: No Contact (email@example.com).

Official Binance-supported Postman collections.

- API documents: https://binance-docs.github.io/apidocs/futures/en/
- Telegram: https://t.me/binance_api_english
- Open issues at: https://github.com/binance-exchange/binance-api-postman

This OpenAPI specification serves as a standardized framework for describing and defining the capabilities of BGL Guest Track. It outlines how clients can interact with the API, providing a structured approach to documenting endpoints, operations, and other integration details. This specification is intended to promote clarity, consistency, and ease of use for developers and consumers of the API, ensuring efficient communication between systems.

Official Binance-supported Postman collections.

- API documents: https://binance-docs.github.io/apidocs/spot/en/#change-log
- Telegram: https://t.me/binance_api_english
- Open issues at: https://github.com/binance-exchange/binance-api-postman

This is a generated connector for [Google BigQuery Data Transfer API v2.0](https://cloud.google.com/bigquery-transfer/docs/reference/datatransfer/rest) OpenAPI specification. The BigQuery Data Transfer API schedules queries or transfers external data from SaaS applications to Google BigQuery on a regular basis.
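The sketch below shows what a scheduled query looks like as a transfer configuration. It assumes the REST path `projects/{project}/locations/{location}/transferConfigs`, the `scheduled_query` data source, and an OAuth 2.0 Bearer token sent with the request (token handling is not shown). The helper name and the display-name format are hypothetical.

```python
import json

DTS_BASE = "https://bigquerydatatransfer.googleapis.com/v1"


def scheduled_query_config(project, location, dataset, table_template, sql,
                           schedule="every 24 hours"):
    """Return (url, body) for creating a scheduled-query transfer config."""
    url = f"{DTS_BASE}/projects/{project}/locations/{location}/transferConfigs"
    body = {
        "displayName": f"Scheduled load of {table_template}",  # hypothetical naming
        "dataSourceId": "scheduled_query",
        "destinationDatasetId": dataset,
        "schedule": schedule,
        "params": {
            "query": sql,
            "destination_table_name_template": table_template,
            "write_disposition": "WRITE_TRUNCATE",  # replace the table on each run
        },
    }
    return url, body


# Usage: POST json.dumps(body) to url with an "Authorization: Bearer <token>" header.
# url, body = scheduled_query_config("my-project", "us", "analytics", "daily_{run_date}", "SELECT 1")
# print(json.dumps(body, indent=2))
```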

Official Binance-supported Postman collections.

- API documents: https://binance-docs.github.io/Brokerage-API/General

This is a generated connector for [Google BigQuery API v2.0](https://cloud.google.com/bigquery/docs/reference/rest) OpenAPI specification. The BigQuery API provides access to create, manage, share and query data.
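Here is a minimal sketch of running a query through the BigQuery API's `jobs.query` method. It also shows how to turn the row encoding in the response (a `schema` plus `rows[].f[].v` cells) into plain dictionaries. The sketch assumes an OAuth 2.0 access token, and the helper names are hypothetical. Cell values come back as strings, so type conversion is left to the caller.

```python
import json
import urllib.request


def build_query_request(project_id: str, sql: str, access_token: str) -> urllib.request.Request:
    """Prepare (but do not send) a jobs.query request using standard SQL."""
    url = f"https://bigquery.googleapis.com/bigquery/v2/projects/{project_id}/queries"
    body = {"query": sql, "useLegacySql": False}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {access_token}", "Content-Type": "application/json"},
        method="POST",
    )


def rows_to_dicts(response: dict) -> list:
    """Map each row's positional cells onto the column names from the schema."""
    names = [field["name"] for field in response["schema"]["fields"]]
    return [
        {name: cell["v"] for name, cell in zip(names, row["f"])}
        for row in response.get("rows", [])
    ]
```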

This is a generated connector from [BINTable API v1](https://bintable.com/get-api) OpenAPI specification. The BIN lookup API is a free API service from bintable.com for looking up card information using the card's BIN. The service maintains a database sourced from the community and other third-party services to make sure all BINs in it are accurate and up to date.
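A BIN lookup only needs the leading digits of the card number. The helper below is a small, hypothetical sketch that extracts those digits so the full card number never has to be sent. It assumes a 6-digit BIN by default, with 8 digits as an option for issuers that use the newer, longer ISO/IEC 7812 identifiers.

```python
import string


def extract_bin(card_number: str, length: int = 6) -> str:
    """Return the leading `length` digits of a card number, ignoring spaces and dashes."""
    digits = "".join(ch for ch in card_number if ch in string.digits)
    if len(digits) < length:
        raise ValueError("card number is too short to contain a BIN")
    return digits[:length]
```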

# 👋 Introduction

Belvo is an open finance API for Latin America that allows companies to access banking and fiscal information in a secure and agile way. Through our API, you can access:

- **Banking information**, such as account statements, real-time balances, historical transactions, and account owner identification.
- **Fiscal information**, such as received and sent invoices and tax returns.
- **Employment information**, such as number of employers, base salary, and social security contributions.
- **Enriched data points**: using our Enrichment API, you can gain insights regarding your users' income, recurring expenses, and risk factors.

Our Postman Workspace lets you interact with and test all the main resources of our API.

# 🤩 Documentation

For our full documentation, please see:

- Our [DevPortal](https://developers.belvo.com/docs) (contains handy guides on how to implement Belvo)
- Our [API reference](https://developers.belvo.com/reference/using-the-api-reference) (detailed documentation on the requests and responses of all our endpoints)

# ▶️ Getting started

Make sure that you also check out our Developer Portal for guides on:

- [How to get started in five minutes](https://developers.belvo.com/docs/get-started-in-5-minutes)
- [Using our Sandbox environment](https://developers.belvo.com/docs/test-in-sandbox)

as well as:

- [How to integrate the widget](https://developers.belvo.com/docs/connect-widget)
- [Use our quickstart application](https://developers.belvo.com/docs/quickstart-application)

# 👀 Watch and Fork us!

> **⚠️ Warning: Credential Use** Please make sure that you use Postman's Environment Variables to store any sensitive parameters, most importantly your API keys or Cookie ID. Follow our [Setting Up Postman guide](https://developers.belvo.com/docs/test-with-postman) to see how to do this.

If you'd like to use our collection:

1. Click "Watch" on the collection.
2. Fork the collection.

You'll get a notification whenever we update the collection, and you'll be able to pull in all the changes, so you're always working with the most up-to-date copy.
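Outside Postman, the same credential-handling advice applies: keep secrets in environment variables rather than in code. The sketch below assumes Belvo's HTTP Basic authentication with a secret ID and secret password. The environment variable names `BELVO_SECRET_ID` and `BELVO_SECRET_PASSWORD` and the helper name are hypothetical.

```python
import base64
import os


def belvo_auth_header(env=os.environ) -> dict:
    """Build an HTTP Basic header from credentials stored in environment variables."""
    secret_id = env.get("BELVO_SECRET_ID")  # hypothetical variable name
    secret_password = env.get("BELVO_SECRET_PASSWORD")  # hypothetical variable name
    if not secret_id or not secret_password:
        raise RuntimeError("Set BELVO_SECRET_ID and BELVO_SECRET_PASSWORD first")
    token = base64.b64encode(f"{secret_id}:{secret_password}".encode("utf-8")).decode("ascii")
    return {"Authorization": f"Basic {token}"}
```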

SmartDocs Web API

This OpenAPI specification serves as a standardized framework for describing and defining the capabilities of the Bifrost OCC. It outlines how clients can interact with the API, providing a structured approach to documenting endpoints, operations, and other integration details. This specification is intended to promote clarity, consistency, and ease of use for developers and consumers of the API, ensuring efficient communication between systems.

This OpenAPI specification serves as a standardized framework for describing and defining the capabilities of BentoWeb. It outlines how clients can interact with the API, providing a structured approach to documenting endpoints, operations, and other integration details. This specification is intended to promote clarity, consistency, and ease of use for developers and consumers of the API, ensuring efficient communication between systems.

This is a generated connector from [Azure Analysis Services API v2017-08-01](https://azure.microsoft.com/en-us/services/analysis-services/) OpenAPI specification. The Azure Analysis Services Web API provides a RESTful set of web services that enables users to create, retrieve, update, and delete Analysis Services servers.

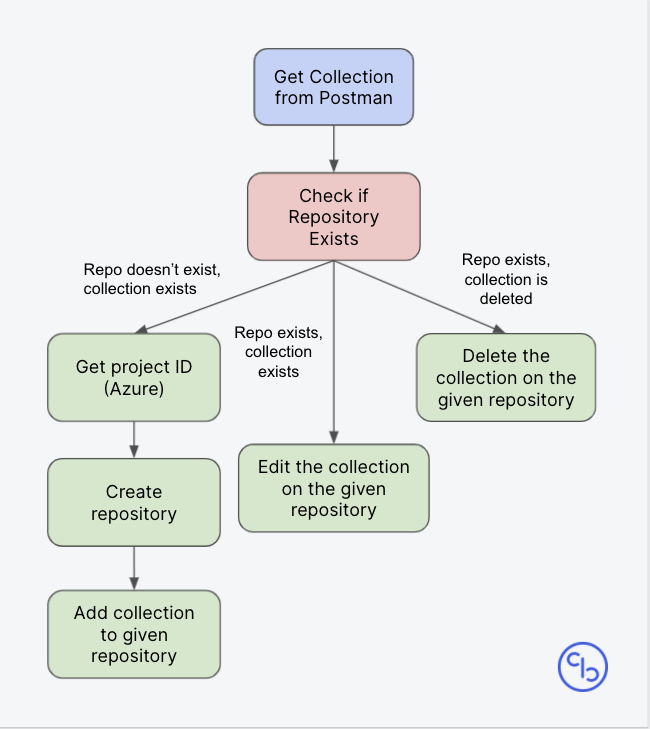

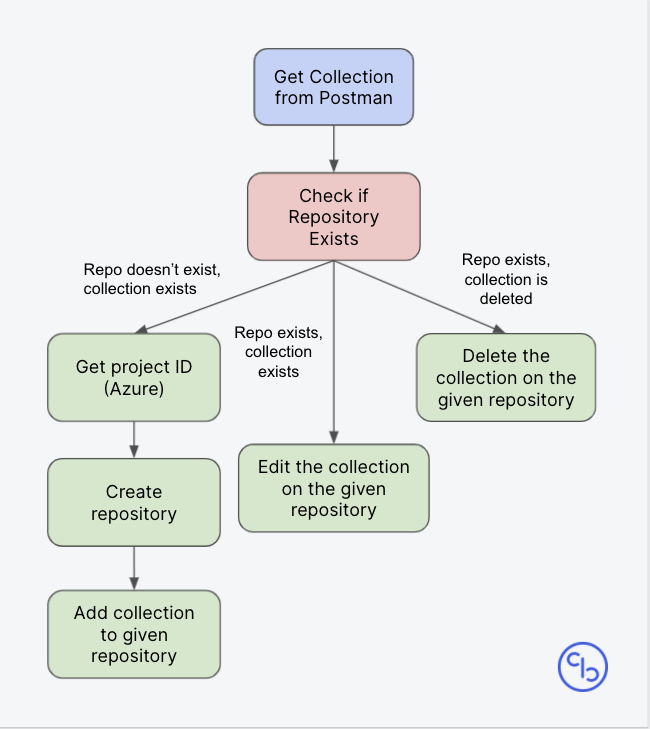

# Introduction

This collection is used to create a repository in Azure Repos, upload a Postman collection to that repository, and commit collection changes to that repository. Each copy of this integration is intended to run a separate monitor on a distinct collection that the user wants uploaded to Azure Repos. When paired with a monitor for a specific collection, this integration can track that collection across multiple branches and repos. The branch name and repo name are set in the environment for that monitor.

**Note**: This integration does not support renaming files or directories after they have been committed to Azure Repos. If you want to change the current `fileName` or `directoryName` after the initial commit to a branch has been made, you will have to start with a new branch. This integration also does not support pushing to repositories that have been manually created or edited in Azure Repos, because manual changes leave the auto-generated variables out of date. To prevent these errors, create repositories and make commits through this integration rather than manually.

# Prerequisites

This collection requires that you have done the following:

- Made an account in Azure DevOps
- Created an organization in your DevOps account
- Created a project in your DevOps organization
- Generated a Personal Access Token in your DevOps project (go to User Settings, click Personal Access Tokens, and click New Token)

# Monitors

In the integration, a monitor automatically checks for changes made to the collection and commits them to Azure Repos. To create this monitor, enter the desired Monitor Name and select the ***Azure Repos Integration*** collection as the Collection. Select the ***Azure Repos*** environment as the environment, and choose how often the monitor should run to check whether your collection has been updated (we recommend once a day). Your monitor should then be created ([learn more about creating a monitor here](https://learning.postman.com/docs/designing-and-developing-your-api/monitoring-your-api/setting-up-monitor/#:~:text=When%20you%20open%20a%20workspace,Started%2C%20then%20Create%20a%20monitor.)).

# Mocks

If you would like to see example responses and run and interact with the collection without having to input valid credentials, you will need to create a mock server. You can do this by left-clicking on your forked version of this integration and selecting ***Mock Collection*** ([learn more about creating a mock server here](https://learning.postman.com/docs/designing-and-developing-your-api/mocking-data/setting-up-mock/)). You will also need to fork the ***[MOCK] Azure Repos*** environment. Copy the mock server URL and assign it to the environment variable ***mockUrl***. If you run the collection with the mock environment selected, it will show you what a successful request looks like, as long as you fill out the environment variables with values of the correct type.

# Environment Setup

To use this collection, the following environment variables must be defined:

| Key | Description | Kind |
|---|---|---|
| `x-api-key` | Your Postman API key ([learn more about the Postman API here](https://learning.postman.com/docs/developer/intro-api/) and [get your Postman API key here](https://go.postman.co/settings/me/api-keys)) | User input |
| `collectionUID` | The ID of the Postman collection that you want to upload to Azure Repos (found under the Info tab of the collection) | User input |
| `collectionContent` | The content of the Postman collection that will be uploaded to Azure Repos | Auto-generated |
| `organization` | The name of the organization that you created in your DevOps account | User input |
| `project` | The name of the project that you created in your DevOps organization | User input |
| `password` | The Personal Access Token that you generated in your DevOps project | User input |
| `repositoryId` | The name of the repository that you want to upload to in Azure Repos | User input |
| `previousCommitId` | The ID of the previous commit made to the given repository and branch | Auto-generated |
| `fileName` | The name of the collection that will be uploaded to Azure Repos | User input, or auto-generated if not specified |
| `directoryName` | The name of the directory that commits will be made to in Azure Repos | User input, or auto-generated if not specified |
| `branch` | The branch that you want to commit changes to in Azure Repos (defaults to `refs/heads/main` if none is provided) | User input, or auto-generated if not specified |
| `projectId` | The ID of the project that you created in your DevOps organization | Auto-generated |
| `changeType` | The type of change made when committing to Azure Repos. Supported change types: `add`, `edit`, `delete` | Auto-generated |
| `baseCollection` | The base URL used to retrieve a Postman collection | Auto-generated |
| `baseRepo` | The base URL used to make all calls to Azure Repos | Auto-generated |
| `base64Collection` | The Base64-encoded version of the Postman collection with the given UID | Auto-generated |

**Note**: If the Create Repo and Push Collection requests are run with a branch or repo that has not been created, a new variable with the key "{{Repository Name}}/{{Branch Name}}/previousCommitId" will be auto-generated. For example, if a newly created repository named "Testing" has the branch name "refs/heads/main", a new variable with the key "Testing/main/previousCommitId" will appear, which will be used in the Push Collection request. Because these variables are auto-generated, you can move between branches and repositories without changing them manually.

Additionally, when the Get Collection request is run with a branch or repo that has not been created, a new variable with the key "{{Repository Name}}/{{Branch Name}}/collectionContent" will be auto-generated. These variables allow the integration to upload different Postman collections to the given branch and repository names.

Furthermore, if the Get Collection request fails because a Postman collection has been deleted, the corresponding file on Azure Repos is deleted. To track whether the file has been deleted, a new variable with the key "{{Repository Name}}/{{Branch Name}}/collectionDeleted" will be auto-generated in the environment. When there is a commit to create a file, this variable is set to "Not Deleted". If the Delete Collection request is run, this variable is set to "Deleted".

# Branches

To specify a particular branch to push to, set the `branch` environment variable using the format "refs/heads/{{Branch Name}}". For example, to push to a branch named "second", set `branch` to "refs/heads/second".
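The variable conventions above can be sketched in code, together with one way a push to Azure Repos can be expressed. The sketch makes several assumptions. The payload shape follows the Azure DevOps Git "Pushes - Create" REST endpoint, not this collection's internals. The mapping of `password`, `previousCommitId`, `changeType`, and `base64Collection` onto that payload is inferred from the table. The `api-version` value and all helper names are hypothetical.

```python
import base64
import json

HEADS_PREFIX = "refs/heads/"
# oldObjectId used by Azure DevOps when a push creates a brand-new branch.
ZERO_COMMIT = "0" * 40


def branch_ref(branch: str) -> str:
    """Normalize "second" or "refs/heads/second" to "refs/heads/second"."""
    return branch if branch.startswith(HEADS_PREFIX) else HEADS_PREFIX + branch


def per_branch_key(repo: str, branch: str, suffix: str) -> str:
    """Build environment keys such as "Testing/main/previousCommitId"."""
    short_name = branch_ref(branch)[len(HEADS_PREFIX):]
    return f"{repo}/{short_name}/{suffix}"


def pat_auth_header(pat: str) -> dict:
    """Basic auth header for a Personal Access Token (empty user name)."""
    token = base64.b64encode(f":{pat}".encode("utf-8")).decode("ascii")
    return {"Authorization": f"Basic {token}"}


def push_url(organization: str, project: str, repository_id: str, api_version: str = "7.0") -> str:
    """URL for the Azure DevOps Git "Pushes - Create" endpoint."""
    return (
        f"https://dev.azure.com/{organization}/{project}/_apis/git/"
        f"repositories/{repository_id}/pushes?api-version={api_version}"
    )


def push_body(branch, previous_commit_id, change_type, directory, file_name, collection=None):
    """Build a single-commit push that adds, edits, or deletes one collection file."""
    change = {"changeType": change_type, "item": {"path": f"/{directory}/{file_name}"}}
    if change_type != "delete":
        # Mirrors the `base64Collection` variable: the collection JSON, Base64-encoded.
        encoded = base64.b64encode(json.dumps(collection).encode("utf-8")).decode("ascii")
        change["newContent"] = {"content": encoded, "contentType": "base64encoded"}
    return {
        "refUpdates": [
            {"name": branch_ref(branch), "oldObjectId": previous_commit_id or ZERO_COMMIT}
        ],
        "commits": [{"comment": f"{change_type} {file_name}", "changes": [change]}],
    }
```

Passing `None` as the previous commit stands in for a branch with no stored `previousCommitId` yet. In that case, the all-zero object ID asks Azure DevOps to create the branch.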

This is a generated connector for [BeezUP Merchant API v2.0](https://api-docs.beezup.com/swagger-ui/) OpenAPI specification. The BeezUP Merchant API provides the capability to read and write BeezUP data such as accounts, stores, product catalogs, channel search, etc.

This OpenAPI specification serves as a standardized framework for describing and defining the capabilities of Batory Foods. It outlines how clients can interact with the API, providing a structured approach to documenting endpoints, operations, and other integration details. This specification is intended to promote clarity, consistency, and ease of use for developers and consumers of the API, ensuring efficient communication between systems.

This is a generated connector for [Azure DataLake Storage (Gen2) API version 2019-10-31](https://azure.microsoft.com/en-us/solutions/data-lake/) OpenAPI specification. Azure Data Lake Storage provides storage for Hadoop and other big data workloads.

# Introduction

A suite of Azure integrations for website management (includes collections for API Management, Active Directory B2C, and Blob Storage).

#### Forking Collections

To use this integration, you will need to fork this collection. You can do this by left-clicking on a collection and pressing Create a Fork. You will also need to fork the environment named ***Azure Website Management Integration*** ([learn more about forking collections and environments here](https://learning.postman.com/docs/collaborating-in-postman/version-control-for-collections/#forking-a-collection)).

# Monitors

In the integration, a monitor continually checks whether the API schema has been updated, so that only the most recent version of the API schema is uploaded to Azure. To create this monitor, enter the desired Monitor Name and select the ***Azure Website Management Integration*** collection as the Collection. Select the ***Azure Website Management*** environment as the environment, and choose how often the monitor should run to check whether your schema has been updated (we recommend once a day). Your monitor should then be created ([learn more about creating a monitor here](https://learning.postman.com/docs/designing-and-developing-your-api/monitoring-your-api/setting-up-monitor/#:~:text=When%20you%20open%20a%20workspace,Started%2C%20then%20Create%20a%20monitor.)).

# Mocks

If you would like to see example responses and run and interact with the collection without having to input valid credentials, you will need to create a mock server. You can do this by left-clicking on your forked version of this integration and selecting ***Mock Collection*** ([learn more about creating a mock server here](https://learning.postman.com/docs/designing-and-developing-your-api/mocking-data/setting-up-mock/)). You will also need to fork the ***[MOCK] Azure Website Management Integration*** environment. Copy the mock server URL and assign it to the environment variable ***mockUrl***. If you run the collection with the mock environment selected, it will show you what a successful request looks like, as long as you fill out the environment variables with values of the correct type.

This OpenAPI specification serves as a standardized framework for describing and defining the capabilities of Banasan. It outlines how clients can interact with the API, providing a structured approach to documenting endpoints, operations, and other integration details. This specification is intended to promote clarity, consistency, and ease of use for developers and consumers of the API, ensuring efficient communication between systems.

This is a generated connector from [Azure OpenAI Completions API v2023-03-15-preview](https://learn.microsoft.com/en-us/azure/cognitive-services/openai/reference#completions/) OpenAPI specification. The Azure OpenAI Service REST API Completions endpoint generates one or more predicted completions based on a provided prompt. The service can also return the probabilities of alternative tokens at each position.
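The snippet below shows a minimal sketch of a Completions call against this API version, including a request for per-token alternatives through `logprobs`. It assumes key-based authentication with the `api-key` header. The resource and deployment names are placeholders, and the helper name is hypothetical.

```python
import json
import urllib.request

API_VERSION = "2023-03-15-preview"


def build_completion_request(resource, deployment, api_key, prompt, max_tokens=16, logprobs=None):
    """Prepare (but do not send) a Completions request for one deployment."""
    url = (
        f"https://{resource}.openai.azure.com/openai/deployments/"
        f"{deployment}/completions?api-version={API_VERSION}"
    )
    body = {"prompt": prompt, "max_tokens": max_tokens}
    if logprobs is not None:
        # Ask for log probabilities of the top-N alternative tokens at each position.
        body["logprobs"] = logprobs
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )


# Sending it: with urllib.request.urlopen(req) as resp: text = json.load(resp)["choices"][0]["text"]
```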

The requests in this collection use the Postman API. If you don't already have an API key, you can generate one by following the instructions [here](https://learning.postman.com/docs/developer/intro-api/#generating-a-postman-api-key).
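Once you have an API key, it goes in the `X-Api-Key` header of each Postman API request. The sketch below prepares a request that fetches a single collection by UID. The UID and key are placeholders, and the helper name is hypothetical.

```python
import urllib.request

POSTMAN_API = "https://api.getpostman.com"


def get_collection_request(collection_uid: str, api_key: str) -> urllib.request.Request:
    """Prepare (but do not send) GET /collections/{uid} authenticated with an API key."""
    return urllib.request.Request(
        f"{POSTMAN_API}/collections/{collection_uid}",
        headers={"X-Api-Key": api_key},
    )


# Sending it (reads the key from an environment variable rather than hard-coding it):
# import json, os
# req = get_collection_request("YOUR_COLLECTION_UID", os.environ["POSTMAN_API_KEY"])
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp)["collection"]["info"]["name"])
```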

This is a generated connector from [Azure IoT Hub API v1.0](https://docs.microsoft.com/en-us/rest/api/iothub/) OpenAPI specification. Azure IoT Hub is a service that offers programmatic access to the device, messaging, and job services, as well as the resource provider, in IoT Hub. You can access messaging services from within an IoT service running in Azure, or directly over the Internet from any application that can send an HTTPS request and receive an HTTPS response. Use this API to manage the IoT hubs in your Azure subscription.
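Applications that call IoT Hub over HTTPS typically authenticate with a shared access signature (SAS) token in the `Authorization` header. The sketch below follows the token format described in Microsoft's IoT Hub security documentation: an HMAC-SHA256 signature over the URL-encoded resource URI and an expiry time. The hub name, device ID, and key are placeholders. The `now` parameter is a hypothetical convenience that makes the output reproducible.

```python
import base64
import hashlib
import hmac
import time
from urllib.parse import quote_plus, urlencode


def generate_sas_token(resource_uri, key_b64, policy_name=None, ttl_seconds=3600, now=None):
    """Return a "SharedAccessSignature sr=...&sig=...&se=...[&skn=...]" string."""
    expiry = int((time.time() if now is None else now) + ttl_seconds)
    # String to sign: URL-encoded resource URI, a newline, then the expiry (epoch seconds).
    string_to_sign = f"{quote_plus(resource_uri)}\n{expiry}"
    digest = hmac.new(base64.b64decode(key_b64), string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    token = {"sr": resource_uri, "sig": base64.b64encode(digest).decode("ascii"), "se": str(expiry)}
    if policy_name is not None:
        token["skn"] = policy_name  # shared access policy name, for hub-level keys
    return "SharedAccessSignature " + urlencode(token)


# Usage (placeholders): generate_sas_token("myhub.azure-devices.net/devices/dev1", device_key)
```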

APIs for fine-tuning and managing deployments of OpenAI models.

# Introduction

This collection is intended to recreate API requests (GET, POST, PUT, and DELETE) from Azure logs within a collection specified by the user.

## Setup

To run the collection, you need the following setup complete:

1. This collection requires that you have a Postman API key ([learn more about the Postman API here](https://learning.postman.com/docs/developer/intro-api/) and [get your Postman API key here](https://go.postman.co/settings/me/api-keys)).
2. API Management and Blob Storage setup:
   - Resource Group Name ([learn more about Azure resource groups here](https://docs.microsoft.com/en-us/azure/azure-resource-manager/management/manage-resource-groups-portal))
   - Service Name (Note: this should be an API Management service ([learn more about the Azure API Management service here](https://azure.microsoft.com/en-us/services/api-management/)))
   - Client ID, Client Secret, and Tenant ID (these are associated with the target Azure application ([learn more about creating Azure Apps and viewing app details here](https://docs.microsoft.com/en-us/azure/active-directory/develop/howto-create-service-principal-portal)))
   - To start, you must have created an API Management Integration and an Application ([learn more about creating an Application here](https://docs.microsoft.com/en-us/azure/active-directory/develop/howto-create-service-principal-portal)). You must connect the API Management Integration with the created Application, using the Application (Client) ID from your created Application ([learn about how to do this here](https://docs.microsoft.com/en-us/azure/active-directory/develop/howto-create-service-principal-portal)).
   - When creating the OAuth 2.0 service within the API Management Integration Service, enter any placeholder URL for the "Client registration page URL" (as a dummy value, we recommend `https://placeholder.com`). Make sure to check Implicit for Authorization grant types. For the Authorization endpoint URL, go to the Endpoints page of the App Registration you created and use the "OAuth 2.0 authorization endpoint (v2)" URL. Go to the same page for the Token endpoint URL and use the "OAuth 2.0 token endpoint (v2)" URL. Make sure to check POST in the "Authorization request method." For client credentials, add the Client ID of the App Registration you have been using.
   - You must also add a role for the App Registration you just created in your API Management Service. Under the API Management Service you created, go to Access Control (IAM) and add a role assignment of "Contributor", assigning access to "User, group, or service principal" and selecting your App Registration by name.
   - You must also add a role for the App Registration in your Blob Storage Account. Under the Blob Storage Account you created, go to Access Control (IAM) and add a role assignment of "Contributor", assigning access to "User, group, or service principal" and selecting your App Registration by name.
   - The created Azure Application should be connected to both the Azure API Management Service and the Azure Blob Storage Service. (If you don't want them connected to the same Application, create separate environment variables for the Client ID, Client Secret, and Tenant ID for each service.)

Next, within the API to be tracked in API Management, click "Operations". Within "All Operations", go to the section named "Inbound Processing", click "Add Policy", and insert the following snippet of code. Replace {BlobStorageAccountName} and {ContainerName} with your storage account name and the container where you want to store the API logs.
```xml
<policies>
    <inbound>
        <authentication-managed-identity resource="https://storage.azure.com/" output-token-variable-name="msi-access-token" ignore-error="false" />
        <!-- Send the PUT request with metadata -->
        <send-request mode="new" response-variable-name="result" timeout="300" ignore-error="false">
            <!-- Build the blob URL from the storage account, destination container, and file name (request ID + .json) -->
            <set-url>@( string.Join("", "https://{BlobStorageAccountName}.blob.core.windows.net/{ContainerName}/", context.RequestId, ".json") )</set-url>
            <set-method>PUT</set-method>
            <set-header name="Host" exists-action="override">
                <value>{BlobStorageAccountName}.blob.core.windows.net</value>
            </set-header>
            <set-header name="X-Ms-Blob-Type" exists-action="override">
                <value>BlockBlob</value>
            </set-header>
            <set-header name="X-Ms-Blob-Cache-Control" exists-action="override">
                <value />
            </set-header>
            <set-header name="X-Ms-Blob-Content-Disposition" exists-action="override">
                <value />
            </set-header>
            <set-header name="X-Ms-Blob-Content-Encoding" exists-action="override">
                <value />
            </set-header>
            <set-header name="User-Agent" exists-action="override">
                <value>sample</value>
            </set-header>
            <set-header name="X-Ms-Blob-Content-Language" exists-action="override">
                <value />
            </set-header>
            <set-header name="X-Ms-Client-Request-Id" exists-action="override">
                <value>@{ return Guid.NewGuid().ToString(); }</value>
            </set-header>
            <set-header name="X-Ms-Version" exists-action="override">
                <value>2019-12-12</value>
            </set-header>
            <set-header name="Accept" exists-action="override">
                <value>application/json</value>
            </set-header>
            <!-- Set the Authorization header with the bearer token requested above -->
            <set-header name="Authorization" exists-action="override">
                <value>@("Bearer " + (string)context.Variables["msi-access-token"])</value>
            </set-header>
            <!-- Set the blob content from the original request: headers, body (if any), and URL -->
            <set-body>@{
                var url = String.Format("{0} {1} {2}", context.Request.Method, context.Request.OriginalUrl, context.Request.Url.Path + context.Request.Url.QueryString);
                var headers = context.Request.Headers;
                Dictionary<string, string> contextProperties = new Dictionary<string, string>();
                foreach (var h in headers) {
                    contextProperties.Add(string.Format("{0}", h.Key), String.Join(", ", h.Value));
                }
                var body = context.Request.Body;
                if (body != null) {
                    var requestLogMessage = new { Headers = contextProperties, Body = context.Request.Body.As<string>(), Url = url };
                    return JsonConvert.SerializeObject(requestLogMessage);
                } else {
                    var requestLogMessage = new { Headers = contextProperties, Url = url };
                    return JsonConvert.SerializeObject(requestLogMessage);
                }
            }</set-body>
        </send-request>
        <!-- Return the storage account's response directly; the request is not forwarded to the backend -->
        <return-response response-variable-name="result" />
        <base />
    </inbound>
    <backend>
        <base />
    </backend>
    <outbound>
        <base />
    </outbound>
    <on-error>
        <base />
    </on-error>
</policies>
```

## Forking Collections

To use this integration, you need to fork this collection. You can do this by clicking on the collection and selecting *Create a fork*. You also need to fork the environment named ***Azure Log Collection*** ([learn more about forking collections and environments here](https://learning.postman.com/docs/collaborating-in-postman/version-control-for-collections/#forking-a-collection)).

## Monitors

In the integration, a monitor is used to check the stored logs for updates automatically once a day and to create a new collection that includes the updated requests (since Postman does not currently support modifying existing collections through its API).
To create this monitor, enter the desired monitor name and select the ***Azure Log Collection*** collection as the collection. Select the ***Azure Log Collection*** environment as the environment, and choose how often the monitor should run to check whether your logs have been updated (we recommend once a day). Your monitor should then be created ([learn more about creating a monitor here](https://learning.postman.com/docs/designing-and-developing-your-api/monitoring-your-api/setting-up-monitor/#:~:text=When%20you%20open%20a%20workspace,Started%2C%20then%20Create%20a%20monitor.)).

## Mocks

If you would like to see example responses, and run and interact with the collection without entering valid credentials, you need to create a mock server. You can do this by clicking on your forked version of this integration and selecting ***Mock Collection*** ([learn more about creating a mock server here](https://learning.postman.com/docs/designing-and-developing-your-api/mocking-data/setting-up-mock/)). You also need to fork the ***[MOCK] Azure Log Collection*** environment. Copy the mock server URL and assign it to the environment variable ***mockUrl***. If you run the collection with the mock environment selected, it shows what a successful request looks like, as long as you fill in the environment variables with values of the correct type.

## Environment Setup

To use this collection, the following environment variables must be defined:

| Key | Description | Kind |
|---|---|---|
| `x-api-key` | Your Postman API key ([learn more about the Postman API here](https://learning.postman.com/docs/developer/intro-api/) and [get your Postman API key here](https://go.postman.co/settings/me/api-keys)) | User input |
| `subscriptionId` | Your Azure subscription ID | User input |
| `tenantId` | The ID of your Active Directory (AD) in Azure. | User input |
| `clientId` | Client ID of the Azure app (found in Azure app registrations). | User input |
| `clientSecret` | Client secret of the Azure app (found in Azure app registrations). | User input |
| `bearerToken` | Access token for authentication. | Auto-generated |
| `resourceGroupName` | Name of the resource group created on Azure. | User input |
| `serviceName` | Name of the API Management instance created on Azure. | User input |
| `apiId` | The ID of your API (found under the API details tab). | User input |
| `baseUrl` | The base URL used to generate the bearer token required for various requests. | Auto-generated |
| `containerName` | The name of the blob storage container where logs are stored. (Must match the value in the XML policy.) | User input |
| `storageName` | Name of the Azure storage account. | User input |
| `sharedAccessSignature` | SAS token generated from Blob Storage. (Note: append a "?" to the SAS.) | User input |
| `workspaceID` | ID of the Postman workspace. | User input |
| `blobList`, `blobName`, `logItems`, `blobListJSON` | Auto-generated variables used to parse and iterate through logs. | Auto-generated |
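The policy above writes each intercepted request to Blob Storage as a JSON object with `Headers`, `Body` (only when the request had one), and `Url` fields, where `Url` is the method, the original URL, and the path plus query string joined by single spaces. The sketch below shows, under those assumptions, how such a blob could be turned back into a request. The helpers `parse_log_blob` and `to_postman_item` are hypothetical and are not part of the collection; the output shape assumes the Postman Collection Format v2.1 `item` structure.

```python
import json
from typing import Dict, NamedTuple, Optional


class LoggedRequest(NamedTuple):
    method: str
    original_url: str
    path_and_query: str
    headers: Dict[str, str]
    body: Optional[str]


def parse_log_blob(blob_text: str) -> LoggedRequest:
    """Parse one log blob written by the inbound policy (hypothetical helper)."""
    record = json.loads(blob_text)
    # The policy formats Url as "<Method> <OriginalUrl> <Path+QueryString>".
    method, original_url, path_and_query = record["Url"].split(" ", 2)
    return LoggedRequest(
        method=method,
        original_url=original_url,
        path_and_query=path_and_query,
        headers=record.get("Headers", {}),
        body=record.get("Body"),  # absent when the original request had no body
    )


def to_postman_item(req: LoggedRequest) -> dict:
    """Build a request item, assuming the Postman Collection v2.1 item shape."""
    item = {
        "name": f"{req.method} {req.path_and_query}",
        "request": {
            "method": req.method,
            "header": [{"key": k, "value": v} for k, v in req.headers.items()],
            "url": req.original_url,
        },
    }
    if req.body:
        item["request"]["body"] = {"mode": "raw", "raw": req.body}
    return item
```

Splitting on the first two spaces only keeps the sketch robust if the path segment is followed by nothing (an empty query string); URLs themselves are not expected to contain raw spaces.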

This is a generated connector from [Azure IoT Central API v1.0](https://azure.microsoft.com/en-us/services/iot-central/) OpenAPI specification. Azure IoT Central is a service that makes it easy to connect, monitor, and manage your IoT devices at scale.

This collection recommends which cache clusters in AWS ElastiCache are inactive over a given range of time, based on a few externally specified constraints. It uses CloudWatch to get cluster statistics and is currently based on three metrics:

* CacheHits
* CPUUtilization
* CurrItems

Setup
====================

You need to set the following environment variables:

1. AWS credentials
   - `accessKeyID`
   - `secretAccessKey`
2. `slackWebHookURL` *Description: The collection posts its results to this webhook.*
3. `region` *Description: AWS region to monitor. Ex: "us-east-1"*
4. `days` *Description: Number of days to monitor, counting back from today.*
5. `period` *Description: Length, in seconds, of each interval over which AWS aggregates data points. Ex: 36000 (seconds)*

Miscellaneous
====================

1. AWS allows at most **1440 data points** in a single request. Either make `period` longer or `days` shorter so that the number of aggregated data points does not exceed this limit.
2. The parameters below have **default values** that are used when they are not set in the environment. You can override them in the environment if required; however, the defaults are recommended.

<table>
  <tr>
    <th>Variable Name</th>
    <th>Default Value</th>
  </tr>
  <tr>
    <td>days</td>
    <td>14</td>
  </tr>
  <tr>
    <td>period</td>
    <td>36000</td>
  </tr>
  <tr>
    <td>region</td>
    <td>us-east-1</td>
  </tr>
</table>
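The 1440-data-point limit ties `days` and `period` together: one request covers `days × 86400 / period` points. A minimal sketch of that arithmetic follows, plus a hypothetical inactivity rule over the three metrics. The function names and the `cpu_threshold` value are illustrative assumptions, not the collection's actual logic.

```python
from typing import Sequence

MAX_DATAPOINTS = 1440  # per-request cap noted above
SECONDS_PER_DAY = 86_400


def datapoints_per_request(days: int, period: int) -> int:
    """Data points needed to cover `days` days at one point per `period` seconds (rounded up)."""
    return (days * SECONDS_PER_DAY + period - 1) // period


def within_limit(days: int, period: int) -> bool:
    return datapoints_per_request(days, period) <= MAX_DATAPOINTS


def min_period(days: int) -> int:
    """Smallest whole-second period that keeps `days` days within the cap."""
    return (days * SECONDS_PER_DAY + MAX_DATAPOINTS - 1) // MAX_DATAPOINTS


def looks_inactive(cache_hits: Sequence[float], cpu_utilization: Sequence[float],
                   curr_items: Sequence[float], cpu_threshold: float = 5.0) -> bool:
    """Hypothetical rule: no cache hits, CPU always below cpu_threshold (%), and no items stored."""
    return (sum(cache_hits) == 0
            and max(cpu_utilization, default=0.0) < cpu_threshold
            and max(curr_items, default=0) == 0)
```

With the defaults (`days` = 14, `period` = 36000), a request needs only 34 data points; keeping 14 days, `period` could go as low as 840 seconds before hitting the cap.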

This OpenAPI specification serves as a standardized framework for describing and defining the capabilities of the Azure API. It outlines how clients can interact with the API, providing a structured approach to document endpoints, operations, and other integration details. This specification is intended to promote clarity, consistency, and ease of use for developers and consumers of the API, ensuring efficient communication between systems.

The Azure Blockchain Workbench REST API is a Workbench extensibility point, which allows developers to create and manage blockchain applications, manage users and organizations within a consortium, integrate blockchain applications into services and platforms, perform transactions on a blockchain, and retrieve transactional and contract data from a blockchain. Contact Support: Name: No Contact Email: email@example.com

This OpenAPI specification serves as a standardized framework for describing and defining the capabilities of the AWS S3 API. It outlines how clients can interact with the API, providing a structured approach to document endpoints, operations, and other integration details. This specification is intended to promote clarity, consistency, and ease of use for developers and consumers of the API, ensuring efficient communication between systems.

This OpenAPI specification serves as a standardized framework for describing and defining the capabilities of the AWS Recommendation (Third Party) API. It outlines how clients can interact with the API, providing a structured approach to document endpoints, operations, and other integration details. This specification is intended to promote clarity, consistency, and ease of use for developers and consumers of the API, ensuring efficient communication between systems.

This is a generated connector for [Azure Anomaly Detector API v1](https://azure.microsoft.com/en-us/services/cognitive-services/anomaly-detector/) OpenAPI specification. "The Anomaly Detection service detects anomalies automatically in time series data. It supports several functionalities: one is detecting anomalies across the whole series with a model trained on the time series; another is detecting the last point with a model trained on the points before it. With this service, business customers can discover incidents and establish a logic flow for root cause analysis. We also provide change point detection, which is another common scenario in time series analysis and service monitoring. Change point detection aims to discover trend changes in the time series. Ensuring online service quality is one of the main reasons we developed this service. Our team is dedicated to continuing to improve the anomaly detection service to provide precise results."
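The two detection modes quoted above differ in what the model is trained on: the whole series, or only the points before the last one. To make that distinction concrete, the sketch below uses a deliberately simple stand-in, a z-score test. This is not the Anomaly Detector service's model or API; the function names and the `threshold` parameter are hypothetical.

```python
from statistics import mean, stdev
from typing import List, Sequence


def _is_outlier(value: float, reference: Sequence[float], threshold: float) -> bool:
    """Flag `value` if it lies more than `threshold` standard deviations from the reference mean."""
    mu, sigma = mean(reference), stdev(reference)  # stdev needs at least 2 reference points
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold


def detect_entire_series(series: Sequence[float], threshold: float = 3.0) -> List[bool]:
    """Whole-series mode: one model fitted on the full series, applied to every point."""
    return [_is_outlier(x, series, threshold) for x in series]


def detect_last_point(series: Sequence[float], threshold: float = 3.0) -> bool:
    """Last-point mode: model fitted only on the points before the last one."""
    *history, last = series
    return _is_outlier(last, history, threshold)
```

For `[10, 11, 10, 12, 11, 10, 11, 50]` with the default threshold of 3.0, last-point mode flags the final value, while whole-series mode flags nothing: the spike itself inflates the fitted standard deviation (its z-score is about 2.5).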

A simple collection to monitor RDS instances. Auditing instances involves the following steps:

1. Fetch all instances using the provided access key ID and secret access key.
2. If there are more instances to fetch beyond the response of the first fetch request, extract the pagination token from the response and use it to repeat the fetch request.
3. Once there are no more instances to fetch, save the list of instances as an environment variable.
4. Iterate over the list, repeating the configuration description request for each instance. Once the configuration of an instance is known, compare it to a set of expected results to ensure compliance.

PS: This collection is most useful when run as a [monitor](https://www.getpostman.com/docs/postman/monitors/monitoring_apis_websites), so that these audits run on a periodic basis. You can also configure the built-in Slack integration for Postman monitors to receive instance alerts when things are amiss.

# Required environment variables

This collection requires the following environment variables:

| Name | Description | Required |
|:----------:|:----------------------------------------------------------------------------:|:--------:|
| id | The access key ID for the audit AWS user | Yes |
| key | The secret access key for the audit AWS user | Yes |
| awsRegion | The region to audit instances in. Defaults to us-east-1 | No |
| maxRecords | The number of instances to retrieve per fetch call. Defaults to 100 (max) | No |
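Steps 1 to 4 amount to a token-driven pagination loop followed by a per-instance comparison. The RDS `DescribeDBInstances` action returns a `Marker` for this purpose. A minimal sketch follows, assuming a hypothetical `fetch_page` callable that stands in for one signed request and returns the page of instances plus the next marker (or `None`). The expected-configuration dict is also an illustrative assumption; the fields used (`StorageEncrypted`, `PubliclyAccessible`) are real instance attributes, but which ones the collection checks is not stated.

```python
from typing import Callable, Dict, List, Optional, Tuple

Instance = Dict[str, object]
# Hypothetical fetcher: takes the marker (None on the first call) and
# returns (instances_on_this_page, next_marker_or_None).
FetchPage = Callable[[Optional[str]], Tuple[List[Instance], Optional[str]]]


def fetch_all_instances(fetch_page: FetchPage) -> List[Instance]:
    """Steps 1-3: keep requesting pages until no marker is returned."""
    instances: List[Instance] = []
    marker: Optional[str] = None
    while True:
        page, marker = fetch_page(marker)
        instances.extend(page)
        if not marker:
            return instances


def audit(instances: List[Instance], expected: Dict[str, object]) -> Dict[str, List[str]]:
    """Step 4: map each non-compliant instance ID to the keys that differ from `expected`."""
    findings: Dict[str, List[str]] = {}
    for inst in instances:
        mismatched = [key for key, value in expected.items() if inst.get(key) != value]
        if mismatched:
            findings[str(inst["DBInstanceIdentifier"])] = mismatched
    return findings
```

For example, `audit(fetch_all_instances(fetch_page), {"StorageEncrypted": True, "PubliclyAccessible": False})` returns an empty dict when every instance is compliant, which is a convenient signal for a monitor to stay silent.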

## Description

The collection makes use of the REST API exposed by AWS IAM to fetch data about IAM entities and their access to AWS resources. It processes the fetched data in pre-request scripts and post-request tests to detect deviations from the AWS IAM audit checklist. The audit result is pushed to Slack so that the DevOps team can take further action.

---

## Environment Variables

| Environment Key | Value |
| - | - |
| aws_access_key_id | Read: https://docs.aws.amazon.com/general/latest/gr/aws-sec-cred-types.html#access-keys-and-secret-access-keys |
| aws_secret_access_key | Read: https://docs.aws.amazon.com/general/latest/gr/aws-sec-cred-types.html#access-keys-and-secret-access-keys |
| slack_url | Read: https://api.slack.com/incoming-webhooks |

---

**IAM Audit Checklist**

```
1. Check if root user access keys are disabled.
2. Check if a strong password policy is set for the AWS account:
   - Minimum password length (10)
   - Require at least one lowercase letter
   - Require at least one number
   - Require at least one non-alphanumeric character
   - Allow users to change their own password
   - Enable password expiration (365 days)
   - Prevent password reuse (24)
3. Identify IAM users (humans) whose active access keys have not been rotated within the last 45 days.
4. Identify IAM users (bots) whose active access keys have not been rotated within the last 180 days.
5. Identify IAM users (humans) who have been inactive for more than 180 days.
6. Identify IAM users (bots) who have been inactive for more than 180 days.
7. Check if MFA is enabled for IAM users (humans).
8. Identify all permissive policies attached to roles. Permissive policies: those where the value of "Principal" or "Action" is "*" (wildcard character).
9. Identify unused and not recently used (not last accessed in 180 days) permissions provisioned via policies attached to an IAM entity (user, group, role, or policy) using service last accessed data.
```
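Several checklist items reduce to simple predicates over data that IAM returns. The sketch below covers item 2 (password policy, using the field names of the IAM `PasswordPolicy` object), items 3 and 4 (active-key age, using the `Status` and `CreateDate` fields of access-key metadata), and item 8 (wildcard `Action` or `Principal`). The function names are hypothetical, and reading "Enable password expiration (365 days)" as `MaxPasswordAge <= 365` is an assumption; the collection's own scripts may interpret the thresholds differently.

```python
from datetime import datetime, timedelta
from typing import Any, Dict, List

# Item 2 targets, keyed by IAM PasswordPolicy field names (thresholds assumed from the checklist).
PASSWORD_POLICY_TARGETS = {
    "MinimumPasswordLength": lambda v: v is not None and v >= 10,
    "RequireLowercaseCharacters": lambda v: v is True,
    "RequireNumbers": lambda v: v is True,
    "RequireSymbols": lambda v: v is True,
    "AllowUsersToChangePassword": lambda v: v is True,
    "MaxPasswordAge": lambda v: v is not None and v <= 365,
    "PasswordReusePrevention": lambda v: v is not None and v >= 24,
}


def password_policy_gaps(policy: Dict[str, Any]) -> List[str]:
    """Item 2: names of policy fields that miss their target (missing fields count as gaps)."""
    return [name for name, ok in PASSWORD_POLICY_TARGETS.items() if not ok(policy.get(name))]


def stale_keys(keys: List[Dict[str, Any]], max_age_days: int, now: datetime) -> List[str]:
    """Items 3/4: IDs of active access keys created more than `max_age_days` ago."""
    cutoff = now - timedelta(days=max_age_days)
    return [k["AccessKeyId"] for k in keys if k["Status"] == "Active" and k["CreateDate"] < cutoff]


def _as_list(value: Any) -> List[Any]:
    return value if isinstance(value, list) else [value]


def permissive_statements(policy_document: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Item 8: statements whose Action or Principal is the bare wildcard "*"."""
    flagged = []
    for stmt in _as_list(policy_document.get("Statement", [])):
        actions = _as_list(stmt.get("Action", []))
        principal = stmt.get("Principal")
        principals: List[Any] = []
        if principal == "*":
            principals = ["*"]
        elif isinstance(principal, dict):  # e.g. {"AWS": "*"} or {"AWS": ["arn:..."]}
            for value in principal.values():
                principals.extend(_as_list(value))
        if "*" in actions or "*" in principals:
            flagged.append(stmt)
    return flagged
```

Passing `now` explicitly (instead of reading the clock inside `stale_keys`) keeps the age check deterministic, which makes it easy to run the same audit against a fixed snapshot.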